Learn About Transformers: A Recipe

A recipe to learn about the world of Transformers used in machine learning.

Transformers have accelerated the development of new techniques and models for natural language processing (NLP) tasks. While they have mostly been used for NLP, they are now seeing heavy adoption in computer vision as well. That makes them a very important technique to understand and be able to apply.

I know that many machine learning and NLP students and practitioners are keen to learn about transformers. That motivated me to prepare and maintain a recipe of resources and study materials to help students learn about the world of Transformers.

To begin with, in this post (originally a Twitter thread), I have collected a few links to materials that I used to better understand transformer models and implement them from scratch.

I turned the thread into a post so that I have an easy way to keep updating the study material.

🧠 High-level Introduction

First, try to get a very high-level introduction to transformers. Some references worth looking at:

🔗 https://theaisummer.com/transformer/

🔗 https://hannes-stark.com/assets/transformer_survey.pdf

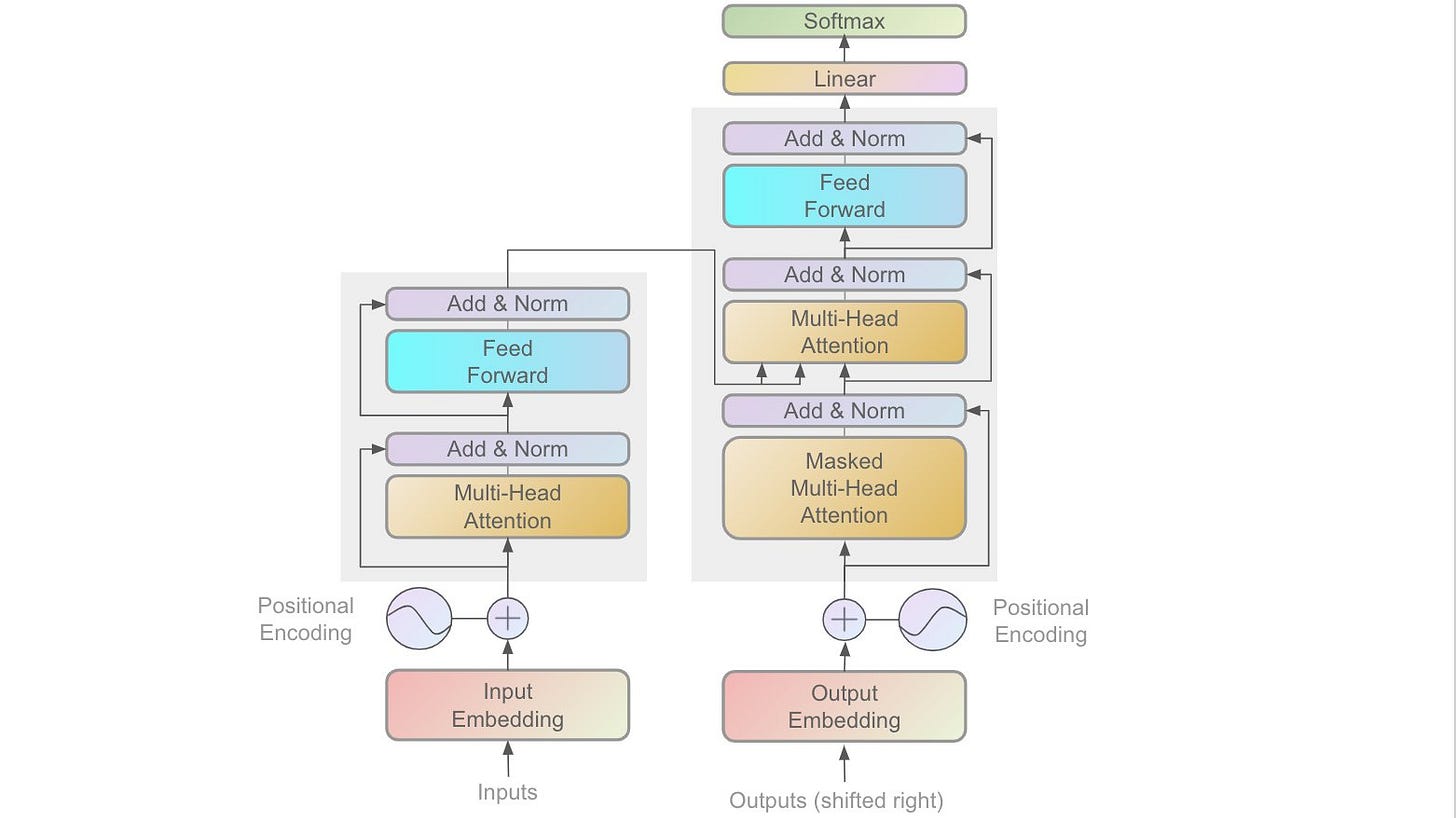

🎨 The Illustrated Transformer

Jay Alammar's illustrated explanations are exceptional. Once you have that high-level understanding of transformers, you can jump into his popular illustrated explanation:

🔗 http://jalammar.github.io/illustrated-transformer/

Figure source: http://jalammar.github.io/illustrated-transformer/

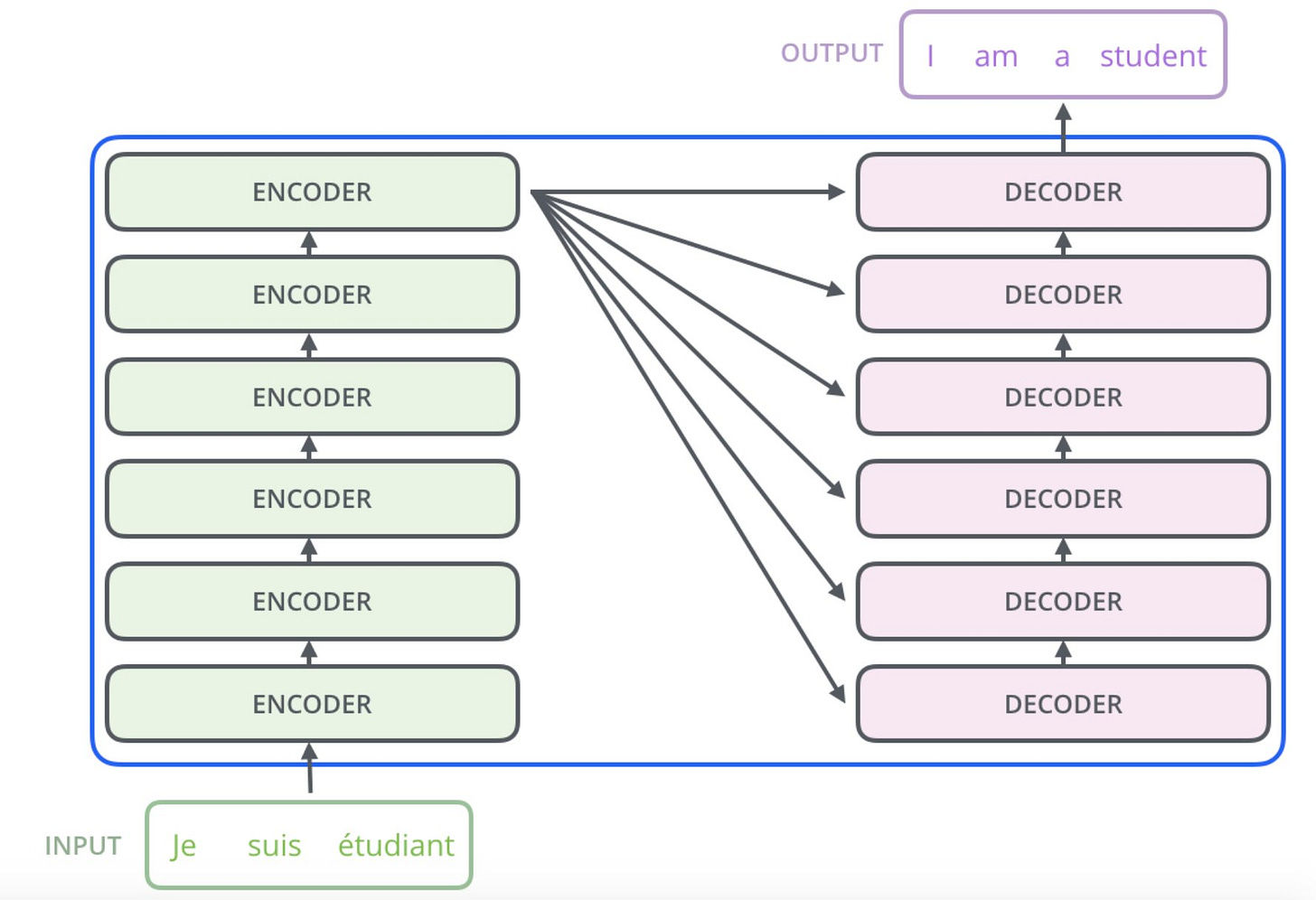

🔖 Technical Summary

At this point, you may be looking for a technical summary and overview of transformers. Lilian Weng's blog posts are a gem, offering concise technical explanations and summaries:

🔗 https://lilianweng.github.io/lil-log/2020/04/07/the-transformer-family.html

Figure source: https://lilianweng.github.io/lil-log/2020/04/07/the-transformer-family.html

👩🏼💻 Implementation

After the theory, it's important to test your knowledge. I like to understand things in detail, so I prefer to implement algorithms from scratch. For implementing transformers, I mainly relied on this tutorial:

🔗 https://nlp.seas.harvard.edu/2018/04/03/attention.html

Figure source: https://nlp.seas.harvard.edu/2018/04/03/attention.html
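The Annotated Transformer builds the full model in PyTorch. As a dependency-free warm-up before that, here is a minimal sketch of its core operation, scaled dot-product attention, in plain Python. It is not code from the tutorial: it handles a single head with no batching, and the helper names (`matmul`, `transpose`, `softmax`, `scaled_dot_product_attention`) and the boolean mask convention are hypothetical choices made for this illustration.

```python
import math
from typing import List, Optional, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(a: Matrix) -> Matrix:
    """Swap rows and columns."""
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the row max before exponentiating."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(
    q: Matrix, k: Matrix, v: Matrix, mask: Optional[List[List[bool]]] = None
) -> Tuple[Matrix, Matrix]:
    """Single-head, unbatched softmax(Q K^T / sqrt(d_k)) V.

    Hypothetical convention for this sketch: mask[i][j] == False blocks
    query i from attending to key j. Returns (output, attention_weights).
    """
    d_k = len(k[0])
    # Similarity between every query and every key, scaled by sqrt(d_k).
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    if mask is not None:
        # Replace blocked positions with a large negative number so that
        # softmax gives them (numerically) zero weight.
        scores = [
            [s if keep else -1e9 for s, keep in zip(row, mask_row)]
            for row, mask_row in zip(scores, mask)
        ]
    weights = [softmax(row) for row in scores]
    # Each output row is a weighted average of the value rows.
    return matmul(weights, v), weights


if __name__ == "__main__":
    q = [[1.0, 0.0], [0.0, 1.0]]
    k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0]]
    # Causal mask: position 0 may only attend to itself.
    causal = [[True, False], [True, True]]
    out, w = scaled_dot_product_attention(q, k, v, mask=causal)
    print(out[0])  # [1.0, 2.0]
    print(w[1])
```

Filling masked scores with a large negative constant rather than negative infinity keeps a fully masked row from turning into NaNs; the Annotated Transformer uses the same idea via `masked_fill(mask == 0, -1e9)` in PyTorch.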

📄 Attention Is All You Need

This paper by Vaswani et al. introduced the Transformer architecture. Read it once you have a high-level understanding and want to get into the details. Follow the other references in the paper if you want to dive deeper.

🔗 https://arxiv.org/pdf/1706.03762v5.pdf

Figure source: https://arxiv.org/pdf/1706.03762v5.pdf
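For quick reference while reading, these are three central equations from the paper, restated in LaTeX (double-check the notation against the PDF): scaled dot-product attention, multi-head attention, and the sinusoidal positional encoding. In the base model the paper uses $d_{\mathrm{model}} = 512$ and $h = 8$ heads, so $d_k = d_v = d_{\mathrm{model}}/h = 64$.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^\top}{\sqrt{d_k}}\right) V

\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^O,
\qquad \mathrm{head}_i = \mathrm{Attention}(Q W_i^Q,\; K W_i^K,\; V W_i^V)

PE_{(pos,\, 2i)} = \sin\!\left(\frac{pos}{10000^{2i/d_{\mathrm{model}}}}\right),
\qquad
PE_{(pos,\, 2i+1)} = \cos\!\left(\frac{pos}{10000^{2i/d_{\mathrm{model}}}}\right)
```

The paper motivates the $1/\sqrt{d_k}$ factor by noting that for large $d_k$ the dot products grow large in magnitude, pushing the softmax into regions with very small gradients.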

👩🏼💻 Applying Transformers

After some time studying and understanding the theory behind transformers, you may be interested in applying them to different NLP projects or research. At that point, your best bet is the Transformers library by HuggingFace.

🔗 https://github.com/huggingface/transformers

Feel free to suggest study material. In the next update, I plan to add a more comprehensive collection of Transformer applications and papers.

I try to keep this guide up to date. To get regular updates on new ML and NLP resources, follow me on Twitter.